Technical Lead – Data Engineering

Location – Hook, Hampshire, UK

Job Type – FTE/CTH

- 4+ years of experience in Azure Databricks with PySpark.

- 2+ years of experience in Databricks Workflows & Unity Catalog.

- 3+ years of experience in ADF (Azure Data Factory).

- 3+ years of experience in ADLS Gen2.

- 3+ years of experience in Azure SQL.

- 5+ years of experience on the Azure cloud platform.

- 2+ years of experience in Python programming & package builds.

Job Description:

- Strong experience implementing secure, hierarchical-namespace-based data lake storage on ADLS Gen2 for structured and semi-structured data, organized into bronze, silver, and gold layers. Hands-on experience with lifecycle policies, access control (RBAC/ACLs), and folder-level security. Understanding of best practices in file partitioning, retention management, and storage performance optimization.

- Able to develop T-SQL queries and stored procedures, and to manage metadata layers on Azure SQL.

- Comprehensive experience working across the Azure ecosystem, including networking, security, monitoring, and cost management relevant to data engineering workloads. Understanding of VNets, Private Endpoints, Key Vaults, Managed Identities, and Azure Monitor. Exposure to DevOps and infrastructure-as-code tools for deployment automation (e.g., Azure DevOps, ARM/Bicep/Terraform).

- Experience writing modular, testable Python code for data transformations, utility functions, and reusable packaged components. Familiarity with Python environments, dependency management (pip/Poetry/Conda), and library packaging. Ability to write unit tests using pytest/unittest and integrate them with CI/CD pipelines.

- Lead solution design discussions, mentor junior engineers, and ensure adherence to coding guidelines, design patterns, and peer review processes. Able to prepare design documents for development and guide the team technically, with experience producing technical design documents, HLDs/LLDs, and architecture diagrams. Familiarity with code quality tools (e.g., SonarQube, pylint) and version control workflows (Git).

- Demonstrates strong verbal and written communication, proactive stakeholder engagement, and a collaborative attitude in cross-functional teams. Ability to articulate technical concepts clearly to both technical and business audiences. Experience working with product owners, QA, and business analysts to translate requirements into deliverables.

- Good to have Microsoft Entra ID (Azure AD) skills and experience with GitHub Actions.

- Good to have orchestration experience using Airflow, Dagster, or Azure Logic Apps.

- Good to have experience working on event-driven architectures using Kafka or Azure Event Hubs.

- Good to have exposure to Google Cloud Pub/Sub.

- Good to have experience developing and maintaining Change Data Capture (CDC) solutions, preferably using Debezium.

- Good to have hands-on experience with data migration projects, specifically involving Azure Synapse and the Databricks Lakehouse.

- Good to have experience managing cloud storage solutions on Azure Data Lake Storage. Experience with Google Cloud Storage will be an advantage.

Working at WinWire

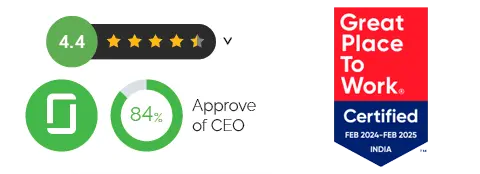

Our Culture Score

Awards

- Microsoft Partner of the Year: Modernizing Applications
- Microsoft Partner of the Year: Migration to Azure
- Microsoft Partner of the Year: Cloud Native App Development
- Microsoft Partner of the Year
- Recognized with SDP Preferred Partner Award