Module Lead

Experience – 9 to 14 Years

Job Description:

- 3+ years of experience in Azure Databricks with PySpark.

- 2+ years of experience in Databricks Workflows & Unity Catalog.

- 2+ years of experience in ADF (Azure Data Factory).

- 2+ years of experience in ADLS Gen2.

- 2+ years of experience in Azure SQL.

- 3+ years of experience in Azure Cloud platform.

- 2+ years of experience in Python programming & package builds.

- Hands-on experience designing and building scalable data pipelines using Databricks with PySpark, supporting batch and near-real-time ingestion, transformation, and processing. Ability to optimize Spark jobs and manage large-scale data processing using the RDD/DataFrame APIs. Demonstrated expertise in partitioning strategies, file format optimization (Parquet/Delta), and Spark SQL tuning.

- Skilled in governing and managing data access for an Azure data lakehouse with Unity Catalog. Experience configuring data permissions, object lineage, and access policies with Unity Catalog. Understanding of integrating Unity Catalog with Azure AD, external metastores, and audit trails.

- Experience building efficient orchestration solutions using Azure Data Factory and Databricks Workflows. Ability to design modular, reusable workflows using tasks, triggers, and dependencies. Skilled in using dynamic expressions, parameterized pipelines, and custom activities. Familiarity with integration runtime configurations, pipeline performance tuning, and error handling strategies.

- Good experience implementing secure, hierarchical-namespace-based data lake storage on ADLS Gen2 for structured and semi-structured data, organized into bronze, silver, and gold layers. Hands-on experience with lifecycle policies, access control (RBAC/ACLs), and folder-level security. Understanding of best practices in file partitioning, retention management, and storage performance optimization.

- Capable of developing T-SQL queries and stored procedures, and of managing metadata layers on Azure SQL.

- Comprehensive experience working across the Azure Cloud ecosystem, including networking, security, monitoring, and cost management relevant to data engineering workloads.

- Experience writing modular, testable Python code for data transformations and utility functions, and packaging reusable components. Familiarity with Python environments, dependency management (pip/Poetry/Conda), and library packaging. Ability to write unit tests using pytest/unittest and integrate them with CI/CD pipelines.

- Able to prepare design documents for development and to adopt code analyzers and unit testing frameworks.

Working at WinWire

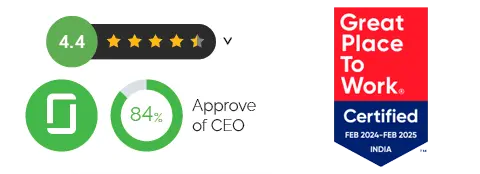

Our Culture Score

Awards

Microsoft Partner of the Year

Modernizing Applications

Microsoft Partner of the Year

Migration To Azure

Microsoft Partner of the Year

Cloud Native App Development

Microsoft Partner of the Year

Recognized with SDP Preferred Partner Award